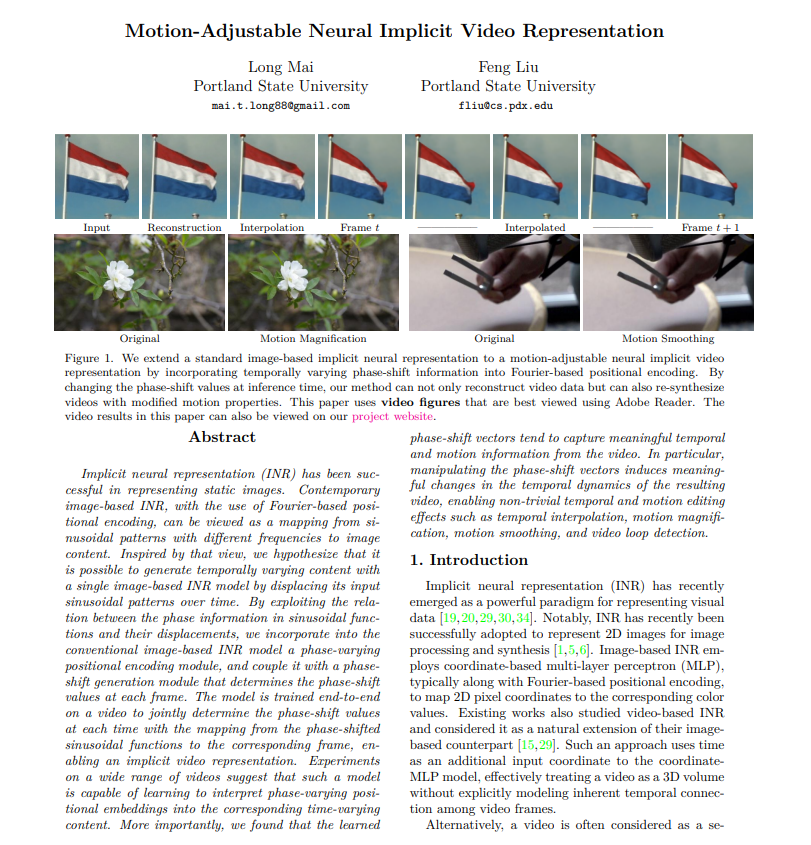

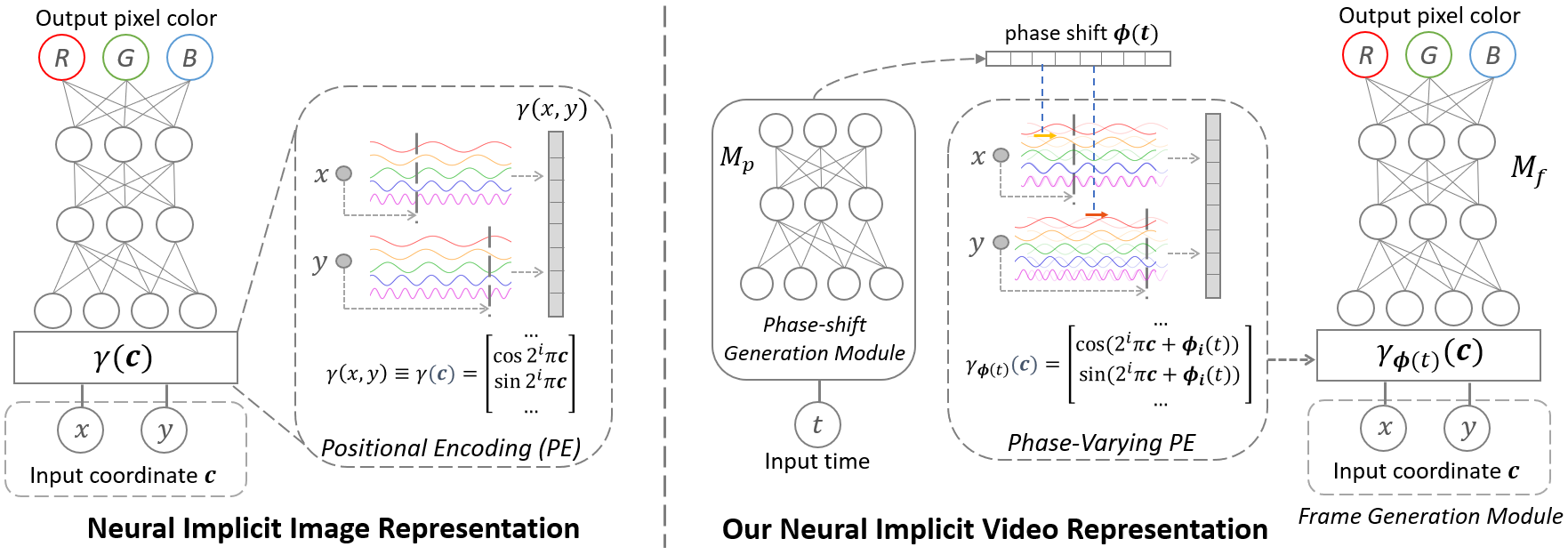

Implicit neural representation (INR) has been successfully adopted to represent static images. Contemporary image-based INR, with Fourier-feature-based positional encoding, can be viewed as a mapping from sinusoidal patterns with different frequencies to image content. Inspired by that view, we hypothesize that it is possible to generate temporally varying content with a single image-based INR model by displacing its input sinusoidal patterns over time. By exploiting the relation between the phase of a sinusoidal function and its displacement, we incorporate into the conventional image-based INR model a phase-varying positional encoding module, and couple it with a phase generation module that determines the phase-shift values at each time frame. The model is trained end-to-end on a video sequence to jointly learn the phase-shift values at each time point and the mapping from the phase-shifted sinusoidal functions to the corresponding frame, enabling an implicit video representation. Experiments on a wide range of video content suggest that such a model is capable of learning to map phase-varying input positional embeddings to the corresponding time-varying video content. More importantly, we find that the learned phase-shift vectors capture meaningful temporal and motion information from the video. In particular, manipulating the learned phase vectors induces meaningful changes in the temporal dynamics of the resulting video, enabling non-trivial temporal and motion editing effects such as temporal interpolation, motion magnification, motion smoothing, and video loop detection.
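The relation between phase and displacement that the abstract relies on is the identity sin(ω(x − d)) = sin(ωx − ωd): shifting a sinusoid of frequency ω by d in space is the same as offsetting its phase by −ωd. The minimal Python sketch below illustrates this with 1-D Fourier features. The function names `fourier_features` and `phases_for_shift`, the 1-D input, and the sin/cos pair per frequency are illustrative assumptions, not the paper's implementation.

```python
import math
from typing import List


def fourier_features(x: float, freqs: List[float], phases: List[float]) -> List[float]:
    """Hypothetical phase-shifted 1-D Fourier features: [sin(w*x + p), cos(w*x + p)] per frequency."""
    feats: List[float] = []
    for w, p in zip(freqs, phases):
        feats.append(math.sin(w * x + p))
        feats.append(math.cos(w * x + p))
    return feats


def phases_for_shift(freqs: List[float], d: float) -> List[float]:
    """Phase offsets that displace every sinusoid by d: sin(w*(x - d)) = sin(w*x - w*d)."""
    return [-w * d for w in freqs]


# Usage: encoding x = 0.3 with phases for a shift of 0.1 matches encoding x = 0.2 with zero phase.
freqs = [1.0, 2.0, 4.0]
shifted = fourier_features(0.3, freqs, phases_for_shift(freqs, 0.1))
moved = fourier_features(0.2, freqs, [0.0] * len(freqs))
print(all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(shifted, moved)))  # True
```

Because each frequency gets its own phase offset, a model whose input phases vary over time can, in principle, express displacements of its sinusoidal patterns without changing the network weights.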

Video

Approach

We extend image-based implicit neural representation (left) to model a video. Our method determines the phase shift at each time t using a phase-shift generation network. A frame generation network then synthesizes the video frame corresponding to the positional embeddings whose phases are shifted by these generated values. At inference time, the phase shifts can be manipulated to generate new videos with modified dynamics.
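Since editing happens only on the per-frame phase-shift vectors, the frame generation network itself stays fixed. The sketch below shows simple stand-ins for the edits listed in the abstract: temporal interpolation (linear blending of neighboring frames' phases), motion magnification (scaling each frame's deviation from the temporal mean), motion smoothing (a moving average over time), and loop detection (the most similar pair of well-separated frames). These specific operators, the list-of-vectors layout, and treating phases as unwrapped real numbers are assumptions for illustration, not the exact procedures used in the paper.

```python
import math
from typing import List, Optional, Tuple

# Hypothetical layout: phases[t] is the phase-shift vector produced for frame t.
Phase = List[float]


def interpolate(phases: List[Phase], t: float) -> Phase:
    """Phase vector at fractional time t >= 0, linearly blending the two nearest frames."""
    i = min(int(t), len(phases) - 1)
    if i == len(phases) - 1:
        return list(phases[i])
    a = t - i
    return [(1 - a) * p0 + a * p1 for p0, p1 in zip(phases[i], phases[i + 1])]


def magnify(phases: List[Phase], alpha: float) -> List[Phase]:
    """Scale each frame's deviation from the temporal mean phase by alpha."""
    n = len(phases)
    mean = [sum(col) / n for col in zip(*phases)]
    return [[m + alpha * (p - m) for p, m in zip(frame, mean)] for frame in phases]


def smooth(phases: List[Phase], radius: int) -> List[Phase]:
    """Moving average of phase vectors over a window of +/- radius frames."""
    n = len(phases)
    out: List[Phase] = []
    for t in range(n):
        window = phases[max(0, t - radius): min(n, t + radius + 1)]
        out.append([sum(col) / len(window) for col in zip(*window)])
    return out


def find_loop(phases: List[Phase], min_gap: int) -> Optional[Tuple[float, int, int]]:
    """Return (distance, i, j) for the closest pair of frames at least min_gap apart."""
    best: Optional[Tuple[float, int, int]] = None
    for i in range(len(phases)):
        for j in range(i + min_gap, len(phases)):
            d = math.dist(phases[i], phases[j])
            if best is None or d < best[0]:
                best = (d, i, j)
    return best


# Usage on a toy three-frame sequence of 2-D phase vectors.
phases = [[0.0, 1.0], [1.0, 3.0], [2.0, 5.0]]
print(interpolate(phases, 0.5))  # [0.5, 2.0]
print(magnify(phases, 2.0))      # [[-1.0, -1.0], [1.0, 3.0], [3.0, 7.0]]
print(smooth(phases, 1))         # [[0.5, 2.0], [1.0, 3.0], [1.5, 4.0]]
print(find_loop([[0.0], [1.0], [2.0], [0.1]], min_gap=2)[1:])  # (0, 3)
```

Each edited phase sequence would then be fed, frame by frame, to the unchanged frame generation network to render the modified video.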

Sample Results

Paper

BibTeX

If you find this work useful for your research, please cite:

@inproceedings{Mai_2022_PhaseNIVR,
  title={{Motion-Adjustable Neural Implicit Video Representation}},
  author={Mai, Long and Liu, Feng},
  booktitle={CVPR},
  year={2022},
}